12.02.2026

As Gartner predicted, most corporate data is now processed outside central data centers, a clear indication of the growing importance of distributed intelligence at the edge of the network.

At the same time, market researchers forecast strong growth in the edge AI market. Studies by Fortune Business Insights and other analyses show that the market for AI-based edge solutions is expanding rapidly. This growth is driven by increasing demand for real-time processing and by Industry 4.0 use cases such as autonomous production lines and predictive maintenance.

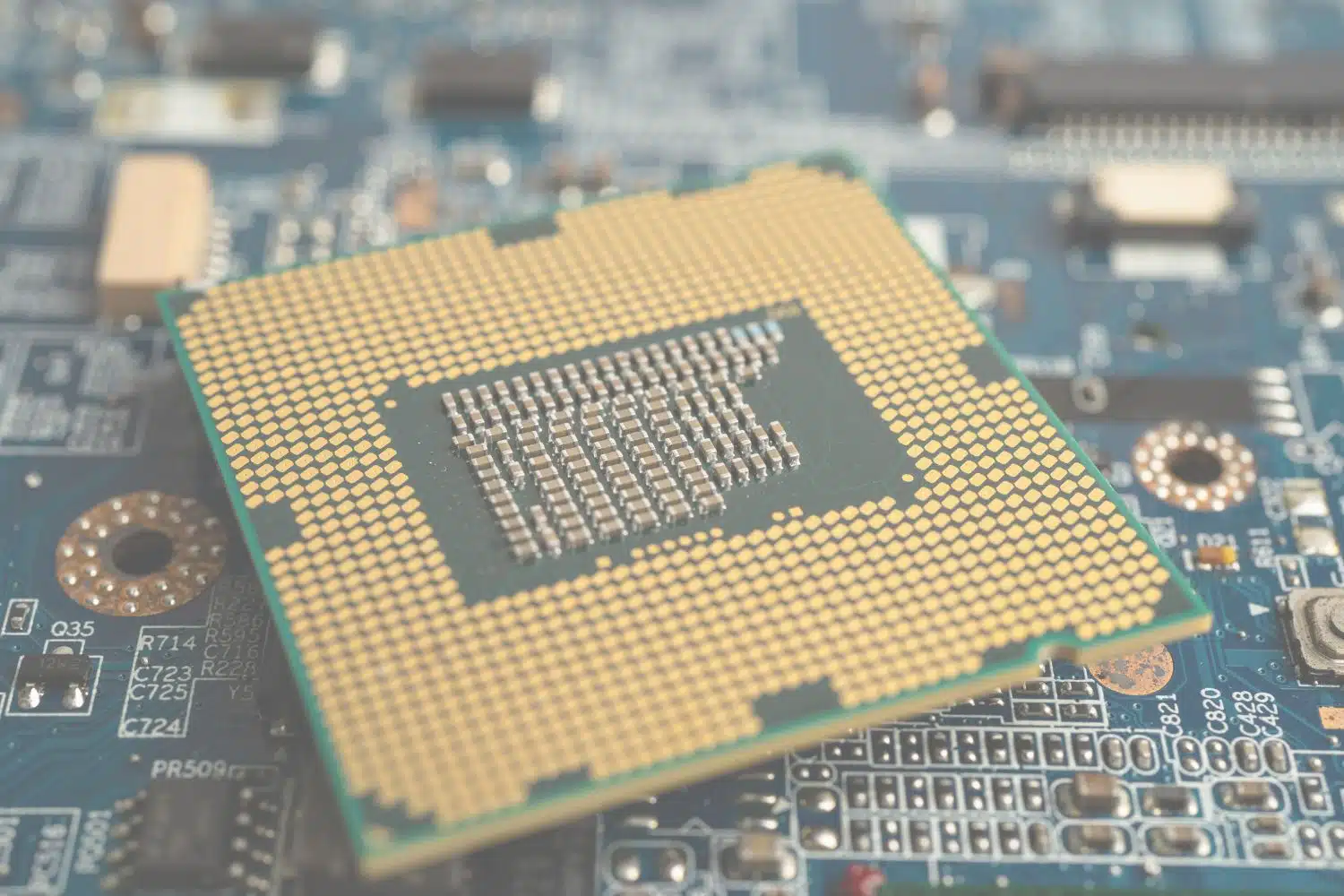

These developments are putting the cost-benefit ratio of edge AI in the spotlight for IT specialists and management. Where is it worth running AI workloads, and how quickly will investments in dedicated hardware such as mini PCs pay off?

The economic evaluation of edge AI often centers on comparing investment costs with operating costs:

| Cost factor | Cloud solution | Edge AI (local) |
|---|---|---|
| Investment | Low (minimal hardware on site) | Mini PCs, local AI infrastructure |
| Operating costs | High cloud computing costs & data traffic | Low (hardly any cloud transfer) |
| Bandwidth costs* | High (large amounts of data sent to the cloud) | Low (data processing on site) |
| IT operation & maintenance | External costs & scaling | Local management, low data transfer |
| Data storage | Permanent storage in the cloud | Selective local storage, low storage requirements |

*Costs incurred for the transfer of data volumes via networks (Internet, cloud services, hosting).
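To make the bandwidth row of the table more tangible, the following Python sketch estimates the monthly transfer volume and cost of a continuous data stream. The data rate, the flat price per GB and the assumption that an edge system uploads only about 1% of the raw data (for example results or events) are hypothetical example values, not figures from this article or from any provider's price list.

```python
# Minimal sketch: monthly bandwidth (data transfer) cost of a continuous stream.
# Data rate, price per GB and upload share are hypothetical example values.

SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month of continuous operation


def monthly_transfer_gb(data_rate_mbit_s: float) -> float:
    """Data volume in GB (10^9 bytes) produced per month at a constant rate."""
    bytes_per_second = data_rate_mbit_s * 1_000_000 / 8
    return bytes_per_second * SECONDS_PER_MONTH / 1e9


def monthly_bandwidth_cost(data_rate_mbit_s: float, price_per_gb: float,
                           upload_share: float = 1.0) -> float:
    """Monthly transfer cost, assuming a flat (hypothetical) price per GB.

    upload_share is the fraction of the raw data actually sent over the network:
    1.0 for a cloud setup that uploads everything, much less for an edge system
    that only transmits results or events.
    """
    return monthly_transfer_gb(data_rate_mbit_s) * upload_share * price_per_gb


# Hypothetical example: one 4 Mbit/s camera stream at 0.05 EUR per GB
cloud = monthly_bandwidth_cost(4.0, 0.05)                    # raw stream uploaded
edge = monthly_bandwidth_cost(4.0, 0.05, upload_share=0.01)  # ~1% uploaded
print(f"Cloud: {cloud:.2f} EUR/month, edge: {edge:.2f} EUR/month")
# Cloud: 64.80 EUR/month, edge: 0.65 EUR/month
```

Because the cost grows linearly with the data rate, the gap widens with every additional camera or sensor, which is the effect the bandwidth row describes.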

As a result, analyses show that processing data locally at the edge can significantly reduce total operating costs in the long term, especially where large volumes of data are continuously generated and analyzed.
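The question of how quickly an edge investment pays off can be framed as a simple break-even calculation: the additional up-front investment divided by the monthly savings in running costs. The Python sketch below illustrates this under stated assumptions. The function names and all euro amounts are hypothetical, and each monthly figure stands for all recurring items from the table (computing, bandwidth, operation, storage) lumped together.

```python
import math
from typing import Optional


def total_cost(capex: float, monthly_opex: float, months: int) -> float:
    """Simple total cost of ownership: up-front investment plus running costs."""
    return capex + monthly_opex * months


def payback_months(edge_capex: float, edge_opex: float,
                   cloud_capex: float, cloud_opex: float) -> Optional[int]:
    """Whole months until the extra edge investment is recovered.

    Returns None if the edge setup saves nothing on monthly running costs,
    so this simple model yields no break-even point.
    """
    extra_investment = edge_capex - cloud_capex
    monthly_saving = cloud_opex - edge_opex
    if monthly_saving <= 0:
        return None
    if extra_investment <= 0:
        return 0  # edge is cheaper both up front and to run
    return math.ceil(extra_investment / monthly_saving)


# Hypothetical figures in EUR: mini PC incl. setup vs. cloud-based inference
print(payback_months(edge_capex=2500, edge_opex=40,
                     cloud_capex=300, cloud_opex=180))  # 16
# Five-year (60-month) comparison: edge first, then cloud
print(total_cost(2500, 40, 60), total_cost(300, 180, 60))  # 4900 11100
```

In this illustrative case the extra 2,200 EUR invested in edge hardware is recovered after 16 months; afterwards, the lower running costs accumulate as savings.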

A good edge AI cost-benefit ratio depends not only on the AI software but also on the choice of suitable hardware. Investments only pay off quickly and sustainably if the edge infrastructure is powerful, scalable and economical. In practice, our spo-comm solutions show how this balancing act can be achieved, from entry-level to sophisticated AI scenarios.

The CORE5 Ultra offers a robust and compact entry point into industrial edge AI. With a modern Intel® Core™ Ultra processor and integrated NPU, this mini PC is ideal for basic inference and automation tasks directly at the data source. It processes sensor data locally and energy-efficiently, without a permanent cloud connection and with minimal running costs.

The NOVA R680E offers the performance and expandability needed for more demanding AI workloads, for example in image processing, predictive maintenance or complex production analyses. Thanks to more powerful CPU options and PCIe expansion (e.g. for a GPU accelerator), this industrial PC can run compute-intensive models directly at the edge, without any data traffic to the cloud and the associated running costs.

The qualities of our spo-comm hardware make it possible to realize strategic advantages such as low latency, higher data security and noticeable cost savings. All of these are key components of a positive edge AI cost-benefit ratio and should not be neglected. In addition, local processing enables companies to react more quickly to production or quality deviations and thus work more productively.

By combining technically mature systems such as the CORE 5 Ultra and the NOVA R680E with a well thought-out edge AI concept, companies build on a foundation that is not only economically convincing but also gives them the flexibility to implement future AI projects efficiently, with our support.
